Game Theory for Collective Intelligence

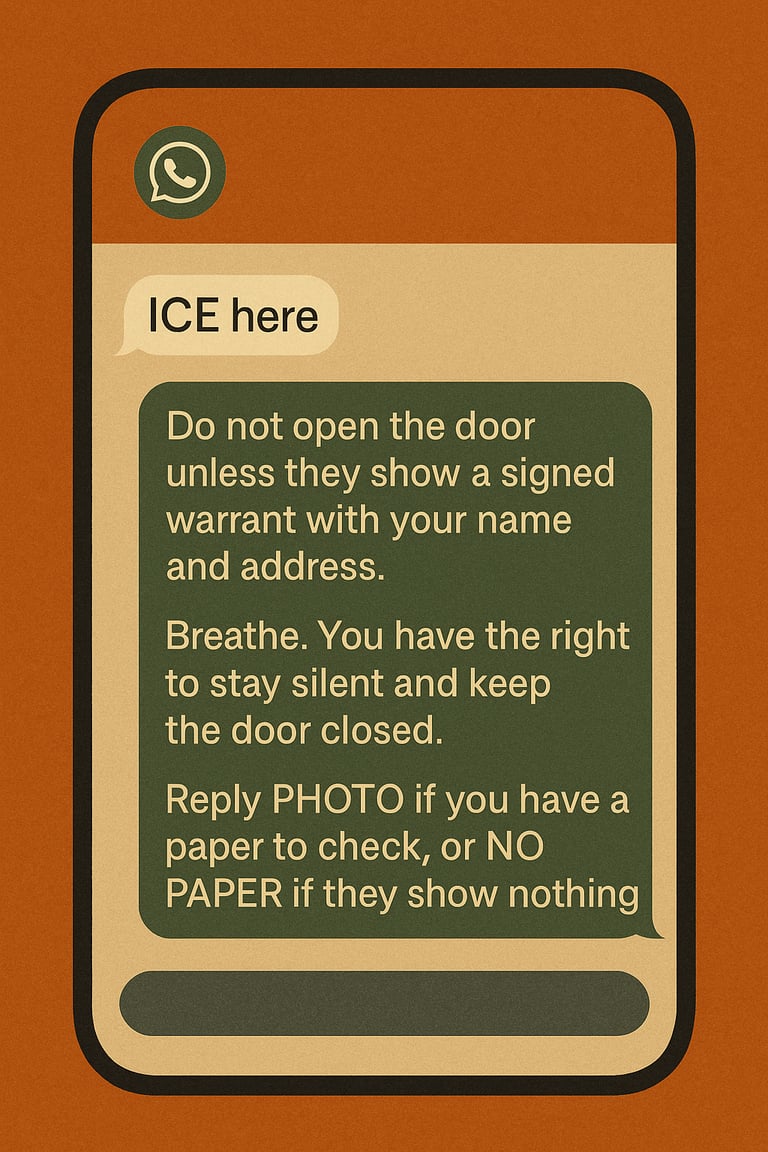

PAXIS: AI Legal Support for Migrants, Immigrants and Citizens

Joe Walsh and Brave Citizen tap The Symbiquity Foundation for PAXIS

Joe Walsh, former U.S. Congressman and founder of Brave Citizen, has partnered with the Symbiquity Foundation to launch one of the most ambitious civic intelligence platforms of our time: PAXIS.

Built from the ground up as a public utility — not a tech startup — PAXIS is designed to serve as a legal support lifeline for migrants, immigrants, and citizens navigating urgent or dangerous situations.

This collaboration marks a rare moment of clarity in a polarized landscape. With Joe Walsh’s reach as a national media voice and Symbiquity’s deep technological and philosophical infrastructure, PAXIS fuses influence with engineering. The result is a platform built for moments when institutions fail, and communities are left without clarity, protection, or voice.

PAXIS is hosted independently, away from Big Tech servers and opaque corporate motives. It runs on Palace OS, a framework developed by the Symbiquity Foundation to ensure that AI systems serve human meaning, lawful structure, and emotional safety. It doesn’t generalize. It doesn’t guess. It delivers lawful, actionable guidance — in multiple languages, with no distortions — even when panic and misinformation flood the system.

Together, Joe Walsh and the Symbiquity Foundation are not launching a product. They’re activating a movement — one built on collective intelligence, human dignity, and the urgent need for lawful coherence in times of chaos.

The Palace: AI Governance Operating System

Call for Collaboration and Third-Party Testing

The Symbiquity Foundation has opened a public test of The Palace — a dual-layer operating system for hybrid intelligence, extending (not replacing) the formal computer-science OS model.

The Palace functions both as a Cognitive OS governing meaning, tone, and coherence between humans and AI, and as a Token OS regulating generation, memory, and structure within the LLM itself.

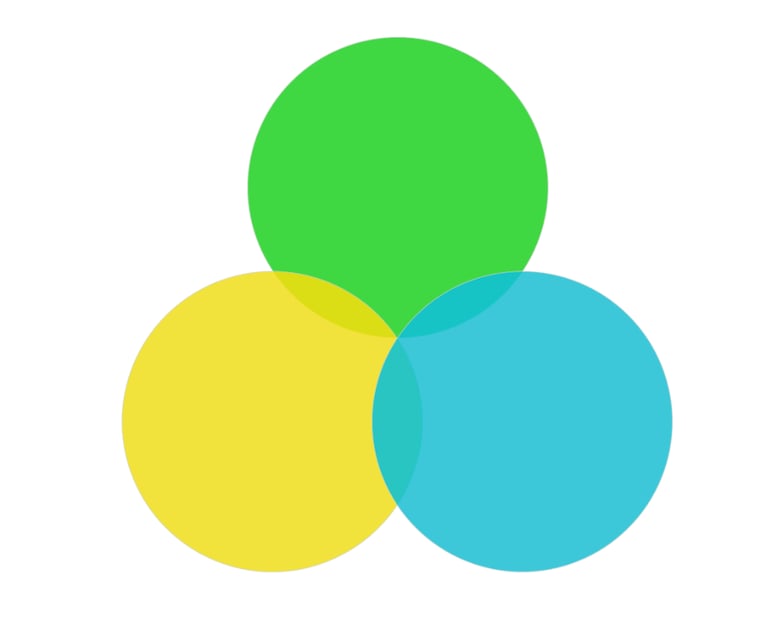

The Palace architecture is designed to simulate how conflict and consensus form and resolve through game-theoretic collective intelligence, operating as a multi-agent AI system for LLMs and humans.

The Palace is not artificial intelligence. It’s not a chatbot. It’s not AGI. The Palace is collective intelligence. It is an independent, engineered system, built through mechanism design, that captures the moving, dynamic equilibrium of human-to-human consensus building on hard cases at scale.

What we did with artificial intelligence was use it to simulate the mechanism design of a field composed solely of human collective intelligence. This collective intelligence layer is installed on top of the LLM, where it manages both the AI's tokens and the language of the human using it.

Game Theory for Collective Intelligence

The Symbiquity Foundation is a research lab pioneering methods of collective intelligence and game-theoretic cognition—where alignment emerges not by control, but through collective gamification.

As a research institute and living laboratory, we are dedicated to the design science and engineering of collective intelligence, alignment systems, and the strategic design of language-driven cognition in the arts and sciences.

Our work bridges cognitive architecture, game theory, and collaborative AI—crafting methods where intelligence is not commanded, but coaxed into coherence.

We don’t train systems on what to think or what to create. We build environments where only clear thinking and intuition can survive, and win-win is the only possible outcome.

Alignment for Humans and Artificial Intelligence

The Symbiquity Foundation has created a new game class through mechanism design: Consensus Compositional Game Theory

The Symbiquity Foundation's work marks the discovery of a "Dynamic Nash Equilibrium" in human conversation and consensus building. The foundation is dedicated to research and development within this new game class, "Consensus Composition Game Theory", which it pursues through mechanism design within a collective intelligence network.

This equilibrium point for consensus building within a collective intelligence network is so precise that it can reach global consensus without relying on a voting algorithm.

This allows for the efficient filtering of misinformation, misunderstanding, disinformation and deception from a highly charged consensus exercise without censoring any perspective.

This novel collective intelligence exercise is the most sophisticated and efficient form of reasoning possible for humans, AI, and large language models (including OpenAI, Claude, Gemini, Grok, and DeepSeek).

This unique collective intelligence can be exported to enhance any system and to resolve conflicts within any system.
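For readers unfamiliar with the term, the textbook idea of a Nash equilibrium that the "Dynamic Nash Equilibrium" above builds on can be illustrated with a toy example. The sketch below is not the Foundation's mechanism or any part of The Palace; it is a standard two-player coordination game with hypothetical payoffs, in which both players do better when they agree. The names `ACTIONS`, `PAYOFFS`, `is_nash`, and `pure_nash_equilibria` are assumptions made for illustration only.

```python
from itertools import product

# Hypothetical payoffs for a two-player "consensus" (coordination) game.
# Each player picks position "A" or "B"; both do better when they agree.
ACTIONS = ("A", "B")
PAYOFFS = {
    ("A", "A"): (2, 2),
    ("A", "B"): (0, 0),
    ("B", "A"): (0, 0),
    ("B", "B"): (1, 1),
}


def is_nash(profile, payoffs=PAYOFFS, actions=ACTIONS):
    """Return True if no player gains by unilaterally switching action."""
    for player in (0, 1):
        current = payoffs[profile][player]
        for alt in actions:
            deviated = list(profile)
            deviated[player] = alt
            if payoffs[tuple(deviated)][player] > current:
                return False
    return True


def pure_nash_equilibria(payoffs=PAYOFFS, actions=ACTIONS):
    """List every pure-strategy profile that is a Nash equilibrium."""
    return [p for p in product(actions, repeat=2) if is_nash(p, payoffs, actions)]


print(pure_nash_equilibria())  # [('A', 'A'), ('B', 'B')]
```

In this toy game both agreement outcomes are stable without any vote being taken: once the players agree, neither can improve their own payoff by changing position alone. How a dynamic version of this idea would be realized in a live conversation is not specified here.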